Hi there! My name is Jiaang Li (李家昂).

I am currently a PhD candidate at the University of Science and Technology of China, supervised by Prof. Yongdong Zhang and Prof. Zhendong Mao. I also collaborate closely with Quan Wang.

My current research interests lie in knowledge-related topics in large language models (LLMs). I also work on knowledge graph representation and reasoning.

My CV can be downloaded here.

📖 Education

- 2021.09 - 2026.06 (Expected), PhD Student in Information and Communication Engineering, University of Science and Technology of China

- Supervised by Prof. Yongdong Zhang and Prof. Zhendong Mao

- 2017.09 - 2021.06, Bachelor of Engineering in Automation, University of Science and Technology of China

- Thesis: Learning and Reasoning with Complex Relations in Knowledge Graphs

- Member of Artificial Intelligence (AI) Elite Class.

📝 Publications

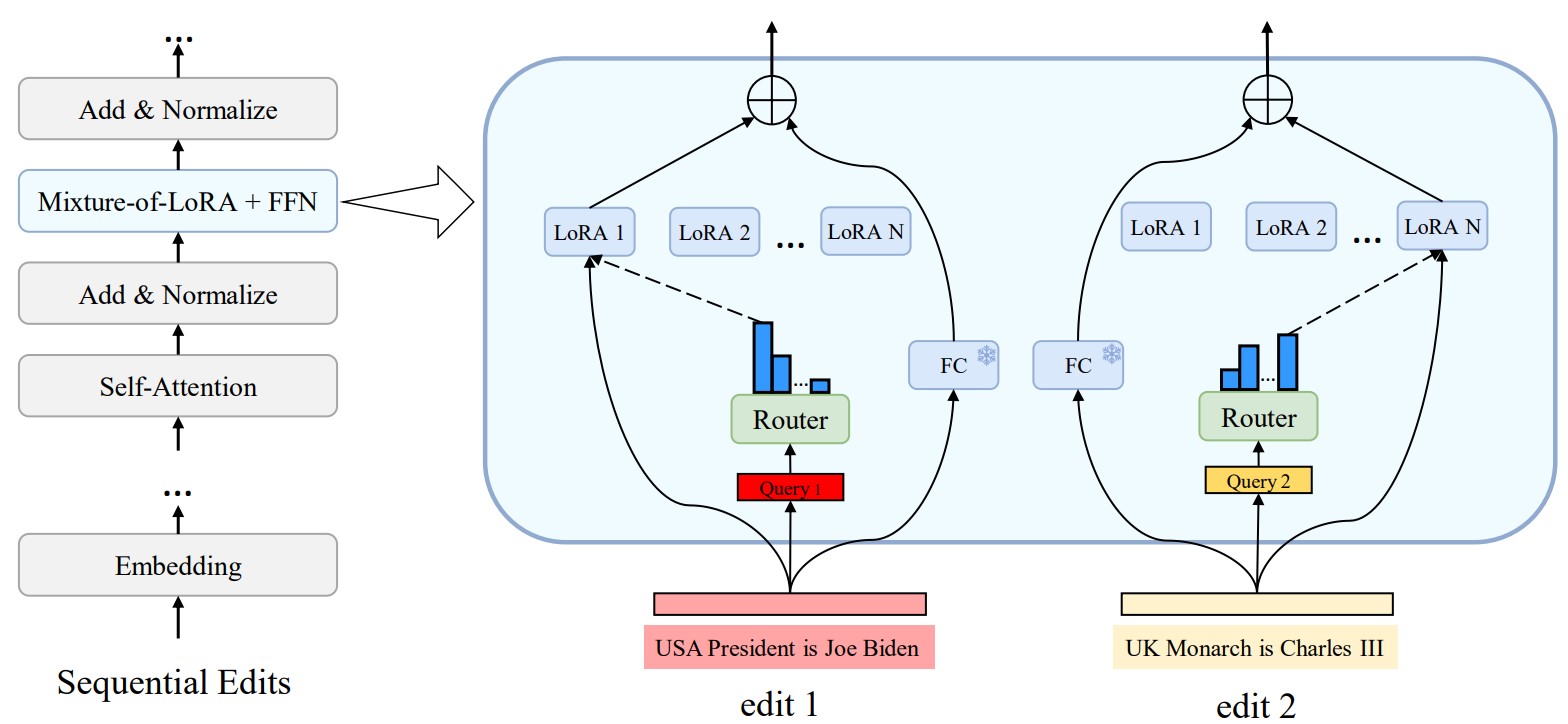

ELDER: Enhancing Lifelong Model Editing with Mixture-of-LoRA

Jiaang Li, Quan Wang, Zhongnan Wang, Yongdong Zhang, Zhendong Mao

- Existing lifelong model editing approaches handle sequential edits through discrete data-adapter mappings: each new edit is assigned its own adapter via a key-value mapping. This makes them sensitive to minor changes in the input data, which leads to inconsistent outputs and poor generalization.

- In contrast, our method maps each edit to a mixture of preset adapters, so minor data changes do not completely alter the adapter parameters, which improves generalization. Experiments on Llama2-7B and GPT2-XL demonstrate the effectiveness of our method.

- Additionally, our method scales well because the preset adapters are shared across an increasing number of edits, whereas previous discrete methods require a separate adapter for each edit.
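To make the contrast between a discrete edit-to-adapter mapping and a mixture of shared adapters concrete, here is a minimal, hypothetical sketch of a generic soft-routed mixture-of-LoRA layer in plain Python. It is not ELDER's implementation: the class name `MixtureOfLoRA`, the softmax router over all adapters, and the random initialization are assumptions for illustration, and the actual method may differ (for example in how adapters are selected or trained). The point it shows is that the output is `W x + sum_k g_k(x) * B_k A_k x`, where the gates `g_k(x)` vary continuously with the input, so a slightly perturbed input yields a slightly different mixture rather than a completely different adapter.

```python
import math
import random


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    # Matrix (list of rows) times vector.
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]


class MixtureOfLoRA:
    """Hypothetical layer: W x + sum_k g_k(x) * B_k A_k x with shared preset adapters."""

    def __init__(self, d, rank, num_adapters, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.W = rand(d, d)                                    # frozen base weight
        self.A = [rand(rank, d) for _ in range(num_adapters)]  # LoRA down-projections
        # Real LoRA usually zero-initializes B; random values here just make deltas visible.
        self.B = [rand(d, rank) for _ in range(num_adapters)]  # LoRA up-projections
        self.router = rand(num_adapters, d)                    # scores each adapter from x

    def gates(self, x):
        # Continuous mixture weights over all preset adapters (they sum to 1).
        return softmax(matvec(self.router, x))

    def forward(self, x):
        out = matvec(self.W, x)
        for g, A, B in zip(self.gates(x), self.A, self.B):
            delta = matvec(B, matvec(A, x))  # low-rank update B_k A_k x
            out = [o + g * dv for o, dv in zip(out, delta)]
        return out


layer = MixtureOfLoRA(d=4, rank=2, num_adapters=3)
x = [1.0, 0.5, -0.5, 0.2]
x_perturbed = [v + 1e-3 for v in x]
print(layer.gates(x))
print(layer.gates(x_perturbed))  # close to the line above: the mixture shifts smoothly
```

Because the same three adapters serve every input, adding more edits does not add more adapters; only the routing weights change.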

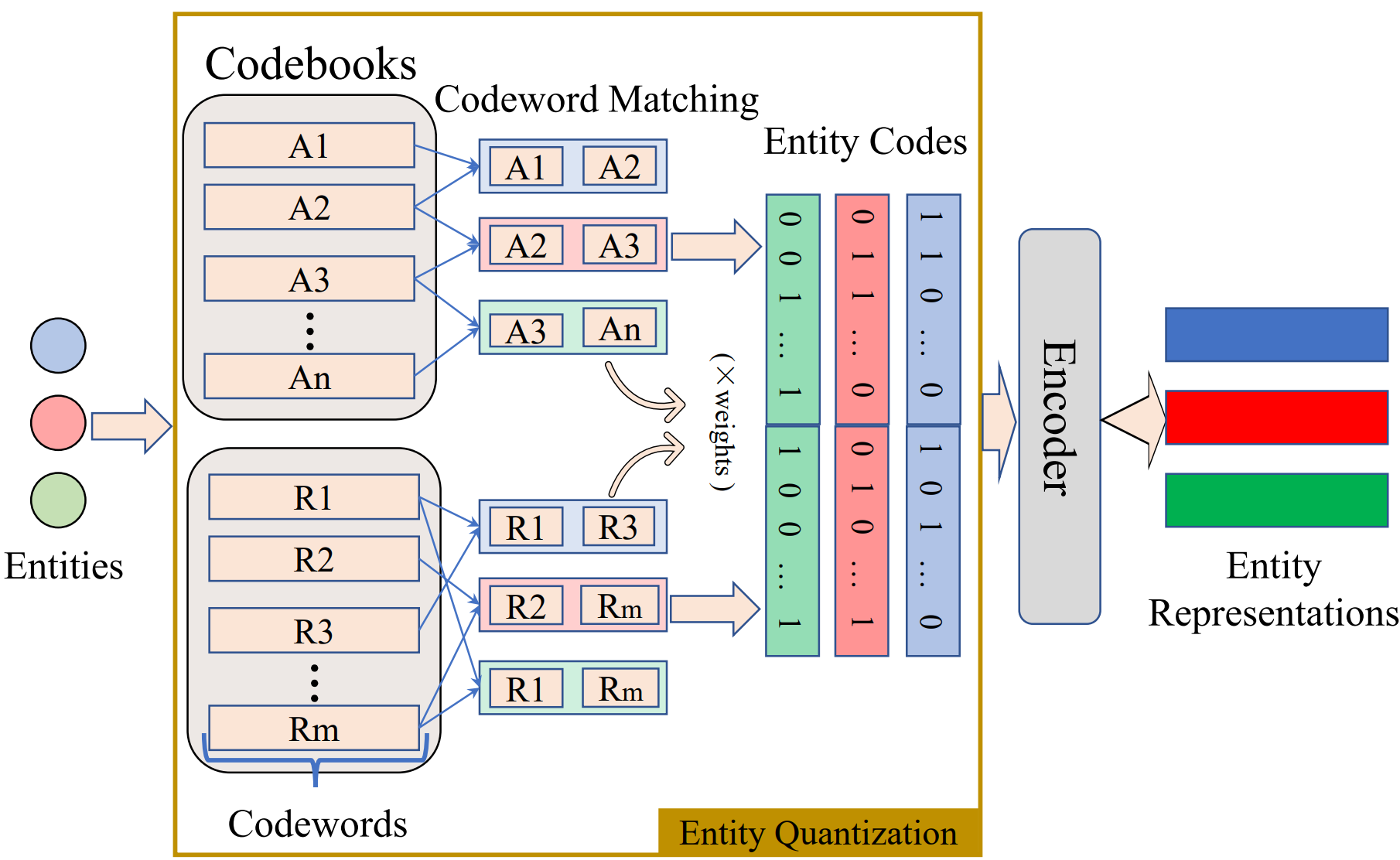

Random Entity Quantization for Parameter-Efficient Compositional Knowledge Graph Representation

Jiaang Li, Quan Wang, Yi Liu, Licheng Zhang, Zhendong Mao

- Knowledge graph (KG) embedding represents each entity with an independent vector, so the number of parameters grows with the number of entities and scalability becomes a challenge. Recent studies propose compositional KG representation for parameter efficiency. We abstract existing works and define the process of obtaining the corresponding codewords for each entity as entity quantization, for which previous works have designed complicated strategies.

- We reveal that simple random entity quantization can achieve results similar to those of current strategies. Further analyses show that, under random entity quantization, entity codes have higher entropy at the code level and higher Jaccard distance at the codeword level, which makes different entities more distinguishable.

- We demonstrate that the random quantization strategy is already good enough for KG representation. Future work could focus on improving entity distinguishability from other aspects.
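The idea of random entity quantization and the codeword-level Jaccard distance can be sketched as follows. This is a hypothetical illustration, not the paper's code: the function names, the uniform sampling of `code_length` distinct codewords from a single codebook, and the mean-pooling composition are assumptions, and the paper's exact codeword types and composition function may differ.

```python
import random


def random_entity_quantization(num_entities, codebook_size, code_length, seed=0):
    """Assign each entity `code_length` distinct codewords sampled uniformly at random."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(codebook_size), code_length)) for _ in range(num_entities)]


def jaccard_distance(code_a, code_b):
    """1 - |A ∩ B| / |A ∪ B| between two entities' codeword sets."""
    a, b = set(code_a), set(code_b)
    return 1.0 - len(a & b) / len(a | b)


def compose(code, codeword_emb):
    """Entity vector = mean of its codewords' embeddings (one simple composition choice)."""
    dim = len(codeword_emb[0])
    return [sum(codeword_emb[c][i] for c in code) / len(code) for i in range(dim)]


codes = random_entity_quantization(num_entities=1000, codebook_size=64, code_length=8)
# Only 64 codeword vectors are stored and shared by all 1000 entities,
# instead of 1000 independent entity vectors.
print(jaccard_distance(codes[0], codes[1]))
```

A larger Jaccard distance between two entities' codes means they share fewer codewords, so their composed vectors are easier to tell apart.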

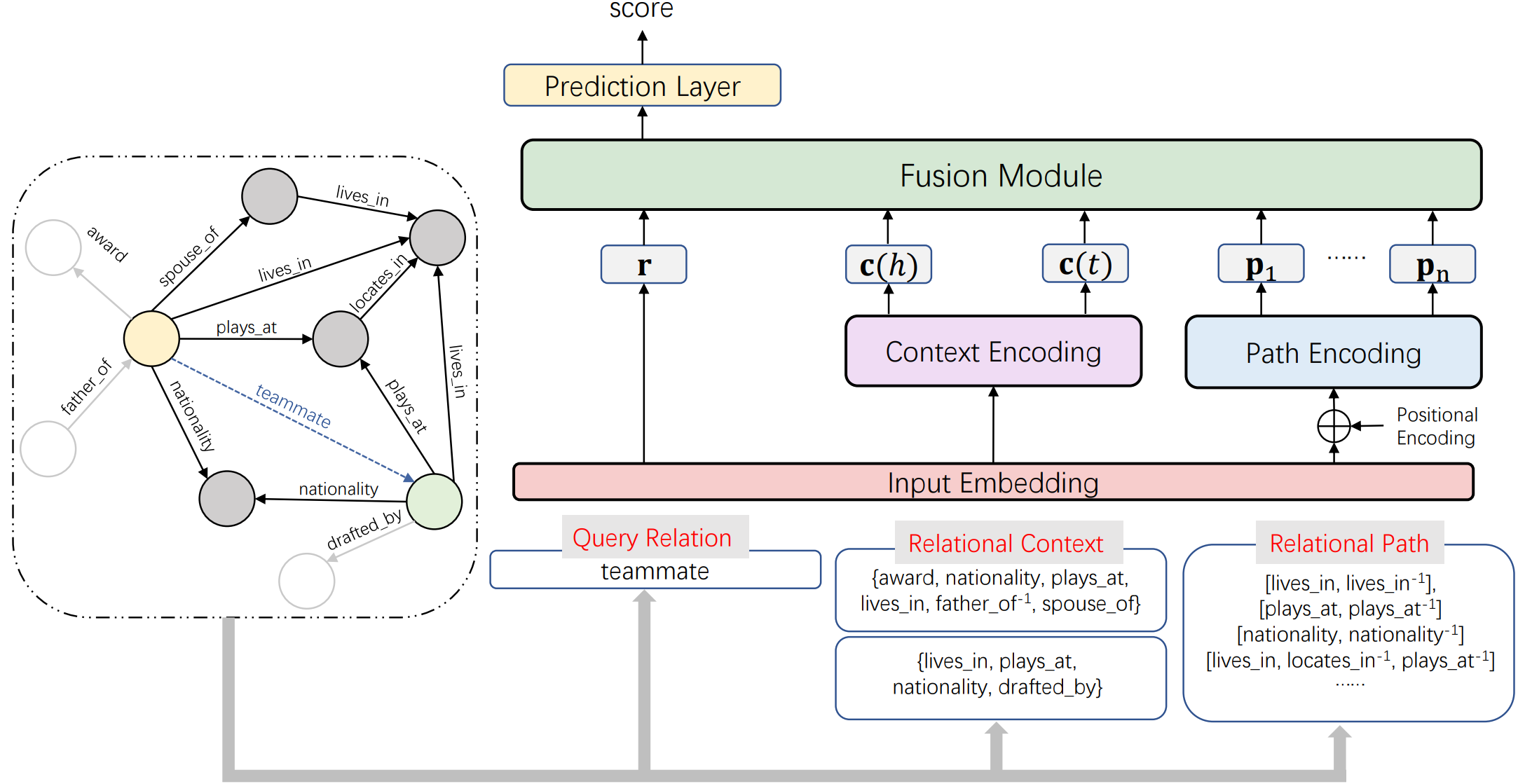

Inductive Relation Prediction from Relational Paths and Context with Hierarchical Transformers

Jiaang Li, Quan Wang, Zhendong Mao

- We propose a method that performs inductive reasoning on knowledge graphs and naturally generalizes to the fully-inductive setting, where the KGs used for training and inference share no entities.

- The method captures both the connections between entities and the intrinsic nature of each individual entity by simultaneously aggregating relational paths and context. It then uses the aggregated higher-level semantics for reasoning, which improves prediction performance.

- We design a Hierarchical Transformer model for path-neighborhood encoding and information aggregation. Our proposed model outperforms all baselines on almost all of the eight version subsets of two fully-inductive datasets. Moreover, we design a method to interpret the model's predictions by providing each element's contribution to the prediction results.
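The two kinds of input described above, relational paths and relational context, can be illustrated with a small, hypothetical extraction routine. This sketch covers only the inputs, not the Hierarchical Transformer that encodes them. The helper names, the inverse-edge suffix `^-1`, the DFS over simple paths up to `max_len` hops, and the definition of context as the relations incident to an entity are assumptions; the paper's exact path sampling and context definitions may differ. Both features are expressed only through relation names, which is what lets them transfer to entities never seen during training.

```python
from collections import defaultdict


def build_adjacency(triples):
    adj = defaultdict(list)
    for h, r, t in triples:
        adj[h].append((r, t))
        adj[t].append((r + "^-1", h))  # inverse edge so paths can traverse both directions
    return adj


def relational_paths(adj, head, tail, max_len=3):
    """Relation sequences of simple paths from head to tail, found by DFS up to max_len hops."""
    paths = []

    def dfs(node, rels, visited):
        if node == tail and rels:
            paths.append(tuple(rels))
            return
        if len(rels) == max_len:
            return
        for r, nxt in adj[node]:
            if nxt not in visited:
                dfs(nxt, rels + [r], visited | {nxt})

    dfs(head, [], {head})
    return paths


def relational_context(adj, entity):
    """Entity-independent context: the sorted relations incident to the entity."""
    return sorted(r for r, _ in adj[entity])


triples = [("a", "born_in", "b"), ("b", "city_of", "c"), ("a", "lives_in", "c")]
adj = build_adjacency(triples)
print(relational_paths(adj, "a", "c"))
print(relational_context(adj, "b"))
```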

🎖 Honors and Awards

- 2023.10 GDC Tech Scholarship.

- 2019.10 Institute of Microsystems, CAS Scholarship.

- 2018 & 2020 USTC Outstanding Student Scholarship.

📚 Experience

- 2023 Spring, Teaching Assistant, Machine Learning and Its Applications, USTC. (Instructor: Prof. Zhendong Mao)

- 2022.9-2023.3, Research Intern, State Key Laboratory of Communication Content Cognition.

- 2020.5-2020.8, Summer Research Intern (Remote), University of California, Santa Barbara. (Hosted by Prof. Yufei Ding).

- 2019.7-2019.8, Summer School in Artificial Intelligence and Robotics, Imperial College London, UK. (Hosted by Prof. Guanzhong Yang, Best Overall Project, [video]).